SLAM, Self-Localization and Autonomous Navigation

Tyche moves autonomously, avoids obstacles, explores unknown space, and builds a virtual map while simultaneously estimating its own location in that map.

Hardware

1) IR sensor: faces forward to detect objects in front of Tyche.

2) IMU sensor:

- Based on the Android built-in gyro sensor and the Tyche wheel encoder.

- Estimates the robot's position and orientation (2D pose) relative to its initial state.

- (good) Its computation cost is very small, with good performance.

- (bad) Drift error accumulates over time, and it cannot build a map of the space; it is blind to the environment.
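The 2D pose estimate described above can be illustrated with a minimal dead-reckoning sketch. It assumes the wheel encoder yields a travelled distance in metres and the gyro yields a yaw rate in rad/s; the names `Pose2D`, `wrap_angle` and `integrate` are hypothetical and are not taken from the Tyche code base.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    """2D pose relative to the initial state (hypothetical type)."""
    x: float = 0.0      # metres
    y: float = 0.0      # metres
    theta: float = 0.0  # heading in radians


def wrap_angle(angle: float) -> float:
    """Wrap an angle to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def integrate(pose: Pose2D, distance: float, yaw_rate: float, dt: float) -> Pose2D:
    """Advance the pose by one sample.

    distance: metres travelled since the last sample (assumed to come from the encoder)
    yaw_rate: rad/s around the vertical axis (assumed to come from the gyro)
    dt:       seconds since the last sample
    """
    dtheta = yaw_rate * dt
    # Use the heading at the middle of the interval for the translation.
    mid_heading = pose.theta + 0.5 * dtheta
    return Pose2D(
        x=pose.x + distance * math.cos(mid_heading),
        y=pose.y + distance * math.sin(mid_heading),
        theta=wrap_angle(pose.theta + dtheta),
    )
```

Because every step adds the new measurement to the previous pose, any bias in the gyro or slip in the wheels is carried into all later poses, which is the accumulated drift noted in the list.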

3) Visual sensor:

- Extra camera module with a 320×240 @ 30 fps image sensor; the FOV should be 120 degrees.

- Estimates the robot pose and extracts 3D features from the environment simultaneously.

- (good) It creates a 3D point cloud of the environment, from which the map can be built.

- (bad) Both the computing cost and the memory cost are very heavy.
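As a rough illustration of what the camera numbers above imply, the sketch below derives a focal length in pixels and the bearing of a pixel column. It assumes an ideal pinhole camera with no lens distortion and reads the 120 degrees as the horizontal FOV; a real wide-angle module would likely need a calibrated distortion model. The function names are hypothetical.

```python
import math

IMAGE_WIDTH_PX = 320        # from the 320x240 sensor
HORIZONTAL_FOV_DEG = 120.0  # assumption: the stated FOV is horizontal


def focal_length_px(width_px: int, fov_deg: float) -> float:
    """Pinhole focal length in pixels: f = (W / 2) / tan(FOV / 2)."""
    return (width_px / 2.0) / math.tan(math.radians(fov_deg) / 2.0)


def column_bearing_deg(u: float, width_px: int, fov_deg: float) -> float:
    """Horizontal angle of pixel column u relative to the optical axis."""
    f = focal_length_px(width_px, fov_deg)
    return math.degrees(math.atan((u - width_px / 2.0) / f))


f_px = focal_length_px(IMAGE_WIDTH_PX, HORIZONTAL_FOV_DEG)
left_edge = column_bearing_deg(0, IMAGE_WIDTH_PX, HORIZONTAL_FOV_DEG)
centre = column_bearing_deg(160, IMAGE_WIDTH_PX, HORIZONTAL_FOV_DEG)
```

Under these assumptions the focal length is roughly 92 pixels, so one pixel near the image centre spans a bit more than half a degree.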

Development progress

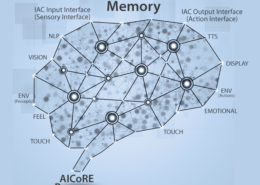

- Fuse IMU and visual data to generate 3D point clouds as sparse sub-maps.

- Match and merge sub-maps; optimize the 3D point cloud.

- Create high-level virtual map with user annotation (from verbal input or other methods)

- Application with AI

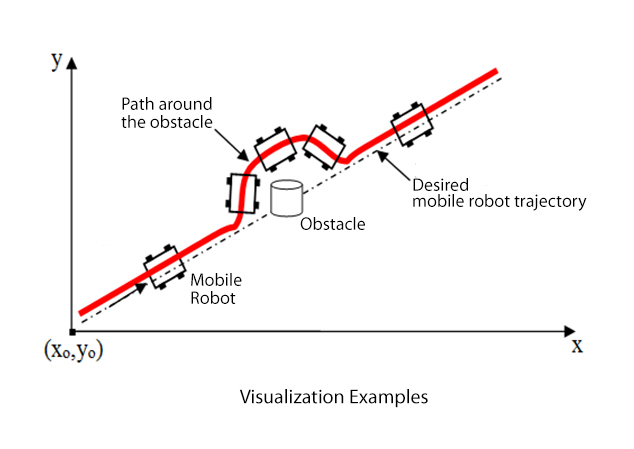

SLAM Visualization

- Implement a 3D visualization of simultaneous localization and mapping (SLAM) based on raw sensor data provided by Tyche with an Android-based cellphone.

- Implement a particle filter to track the robot's trajectory.
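The particle filter mentioned above can be sketched as a predict, weight, resample loop. This is a minimal illustration, not the project's implementation: the measurement model (a range reading to a landmark at a known position), the noise values, and all function names are assumptions made for the example.

```python
import math
import random


def predict(particles, distance, dtheta, rng, sigma_d=0.02, sigma_t=0.01):
    """Move every particle (x, y, theta) by the odometry step plus Gaussian noise."""
    moved = []
    for x, y, theta in particles:
        d = distance + rng.gauss(0.0, sigma_d)
        th = theta + dtheta + rng.gauss(0.0, sigma_t)
        moved.append((x + d * math.cos(th), y + d * math.sin(th), th))
    return moved


def weigh(particles, measured_range, landmark, sigma_r=0.1):
    """Normalized weights from a hypothetical range measurement to a known landmark."""
    lx, ly = landmark
    weights = []
    for x, y, _ in particles:
        err = math.hypot(lx - x, ly - y) - measured_range
        weights.append(math.exp(-0.5 * (err / sigma_r) ** 2))
    total = sum(weights)
    if total == 0.0:
        # All particles are implausible; fall back to uniform weights.
        return [1.0 / len(particles)] * len(particles)
    return [w / total for w in weights]


def resample(particles, weights, rng):
    """Systematic resampling: draw len(particles) samples in proportion to weight."""
    n = len(particles)
    step = 1.0 / n
    start = rng.random() * step
    out, i, cumulative = [], 0, weights[0]
    for k in range(n):
        target = start + k * step
        while target > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        out.append(particles[i])
    return out


def estimate(particles):
    """Mean position and circular-mean heading of the particle set."""
    n = len(particles)
    mean_x = sum(p[0] for p in particles) / n
    mean_y = sum(p[1] for p in particles) / n
    mean_th = math.atan2(sum(math.sin(p[2]) for p in particles),
                         sum(math.cos(p[2]) for p in particles))
    return mean_x, mean_y, mean_th
```

Calling `predict`, `weigh`, `resample` and `estimate` once per sensor sample and storing each estimate yields the trajectory to be visualized.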

Visualize

- Sensor data

- Coordinate frames

- Maps being built

Current status: Waiting for testing with real data